When it comes to on-site optimization, using an SEO crawler is a must. There are hundreds of SEO tools available nowadays. However, only a few are worth a try. Netpeak Spider is one of them.

Netpeak Spider is an effective desktop tool for auditing websites and finding various types of issues. It can be used to check a site’s structure, find broken links, analyze on-page elements, and much more. With its user-friendly interface and intuitive controls, Netpeak Spider is a valuable tool for any webmaster or SEO professional.

What is Netpeak Spider?

Netpeak Spider is a desktop SEO crawler that scans each page of a website and detects issues that may keep it from ranking well in search. In short, you enter a website URL, select the parameters you want to check, and get the results.

The tool is essential for everyone working on website optimization: SEO teams, webmasters, bloggers, link builders, web developers, QA teams, and content marketers. Even your sales team can use it successfully.

Analyzing broken links, incorrect redirects, duplicate meta tags, and internal linking is only a small part of what Netpeak Spider is capable of. Here’s what you can do with the tool in a nutshell:

- Collect all pages from a website and check them against more than 50 SEO parameters

- Detect more than 50 on-page SEO issues and learn what needs to be fixed first and how

- Analyze all incoming/outgoing internal links

- Retrieve full website structure

- Calculate internal PageRank (relative weight of each page from a website)

- Generate and validate sitemaps

- Have a detailed look at HTTP headers and source code

- Scrape almost any data from any website using advanced parameters

- Filter, sort, and segment data in the results table

- Export more than 70 reports, including the Express Audit of the Optimization Quality in PDF

- Create white label PDF reports for clients

- Analyze hreflang issues on your multilingual website

- Simultaneously crawl a list of domains

- Enrich your technical SEO parameters with Google Analytics and Search Console data

- Configure HTTP request headers

As you can see, it’s a multitasking tool that provides deep insight into your on-page SEO. Let’s have a detailed look at its features.

How to Use Netpeak Spider to the Fullest Extent

I could debate Netpeak Spider’s pros and cons till the cows come home, but let’s just see how it works instead.

Launching the Tool

Since Netpeak Spider is a desktop tool, you need to download it to your computer. Don’t worry, you won’t need to reinstall it each time a new version comes out: the tool updates automatically.

To get it, download Netpeak Launcher, which works a bit like Steam or Origin. It includes two tools from Netpeak Software, one of which is Netpeak Spider. Sign in with the credentials you used to register on the website and download the tool. From then on, you can start the spider directly.

At first, the tool may seem quite technical, but it’s really straightforward. After you have started Netpeak Spider, it’s time to choose the parameters you want to check.

How to Set Up Crawling in Netpeak Spider

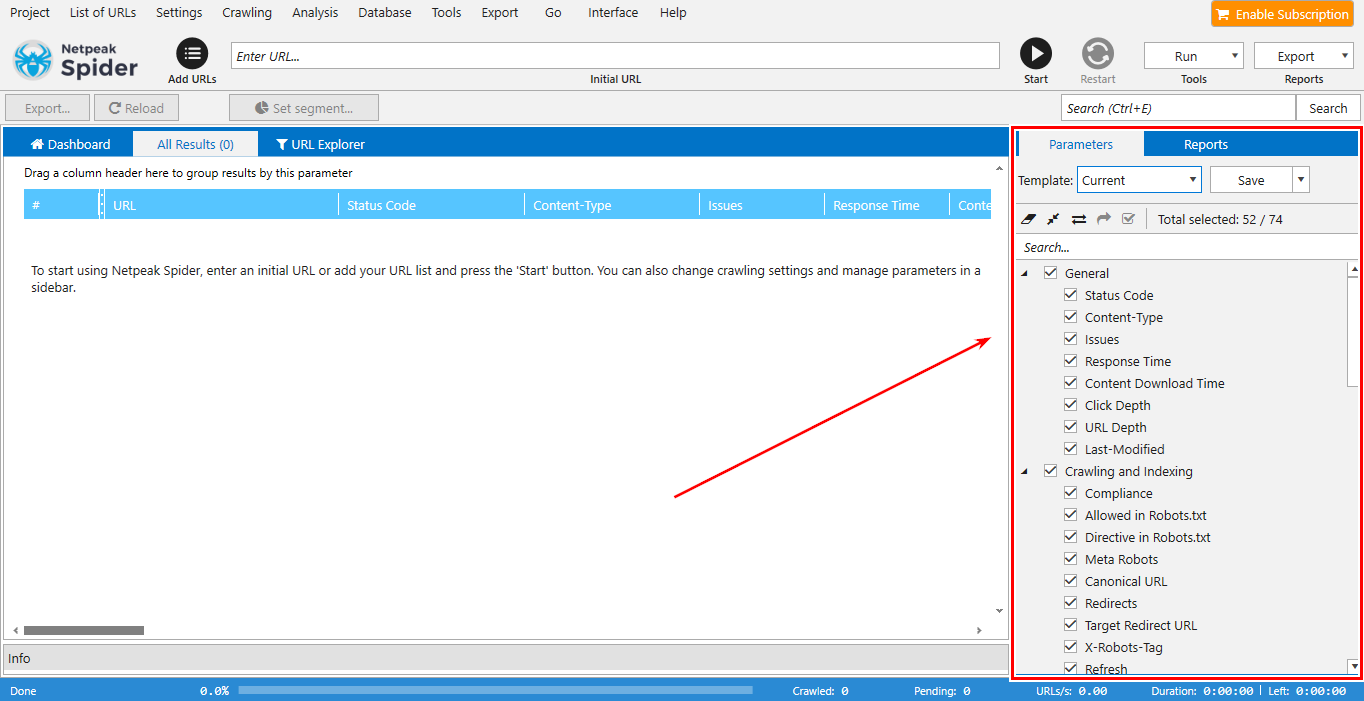

Go to the ‘Parameters’ tab in the sidebar. Here you will see all 54 parameters you can check in Netpeak Spider.

The feature that most sets the tool apart from its competitors is that you can select only the parameters you want to analyze… yes, you heard me right 😎

For instance, if you only need to analyze site content or internal links, you can select all parameters from the corresponding group and disable all the others. This will significantly speed up the crawling process and free you from manual filtering at the end.

If you are an SEO specialist, you know that certain tasks require checking a specific set of parameters. That’s why you can create custom templates with the parameters each task needs… sweet! 😋 Create them once, and switching templates for a new task takes a single click.

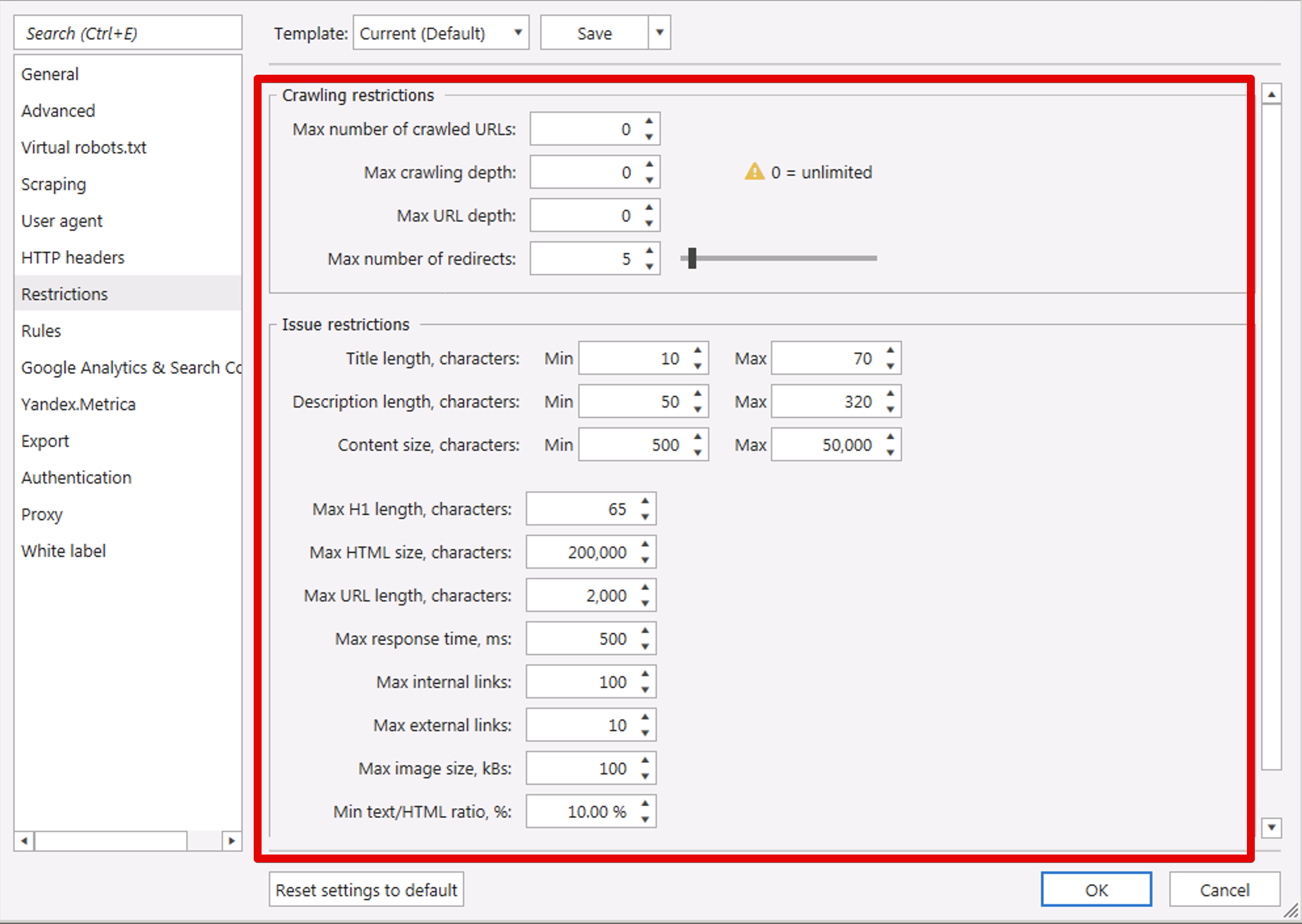

There’s more customization ahead. On the ‘Restrictions’ tab of the crawling settings, you can set custom issue restrictions. That means if you don’t agree that a response time of 1 second should count as an issue, you can set your own maximum. Lovely ☺️

Crawling Settings

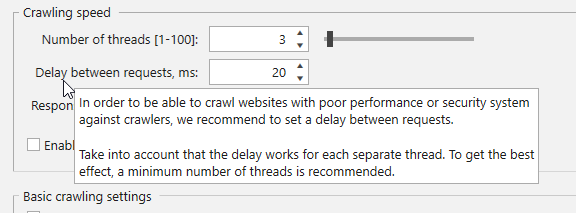

Just like parameters, crawling settings are highly customizable. It’s easy to get lost in numerous checkboxes. Fortunately, each setting has an informative tooltip describing its purpose.

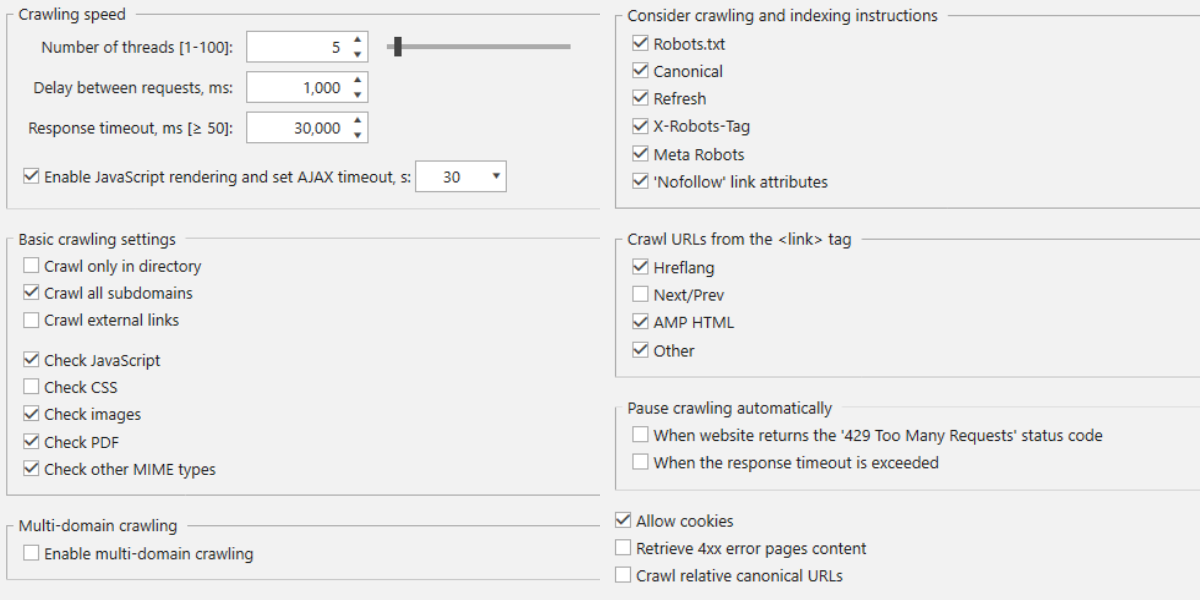

The first two settings tabs, ‘General’ and ‘Advanced’, contain all the main crawling settings.

You can set up:

- Crawling speed (number of simultaneous threads, delay between requests, maximum response timeout)

- JavaScript rendering (essential for crawling sites built with JavaScript)

- Crawling scope (crawl only a specific website directory, crawl all subdomains, crawl external links, check different file types)

- Taking crawling and indexing instructions into account (robots.txt, meta robots, X-Robots-Tag, etc.)

- Crawling URLs from the <link> tag

- Other options, such as pausing, using cookies, and crawling relative canonical URLs

- Multi-domain crawling (essential for crawling different domains simultaneously)

Ohh yeah 🤩
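To make the scope options above a bit more concrete, here’s a minimal Python sketch of how a crawler might decide whether a discovered URL is in scope. It’s only an illustration, not Netpeak Spider’s code: `in_scope` and its two flags are hypothetical names, and treating `www.` as the main domain rather than a subdomain is my own assumption.

```python
from urllib.parse import urlparse


def in_scope(url, start_url, crawl_subdomains=False, exact_directory=False):
    """Return True if `url` falls inside the crawl scope defined by `start_url`.

    Hypothetical helper: the two flags loosely mirror the "crawl all
    subdomains" and "crawl only the start directory" settings.
    """
    start, candidate = urlparse(start_url), urlparse(url)
    start_host = (start.hostname or "").lower()
    candidate_host = (candidate.hostname or "").lower()

    if crawl_subdomains:
        # Assumption: "www." is treated as the main domain, not a subdomain.
        base = start_host[4:] if start_host.startswith("www.") else start_host
        same_site = candidate_host == base or candidate_host.endswith("." + base)
    else:
        same_site = candidate_host == start_host
    if not same_site:
        return False

    if exact_directory:
        # Directory part of the start URL, e.g. "/blog/" for "/blog/post-1".
        start_path = start.path or "/"
        directory = start_path[: start_path.rfind("/") + 1]
        return (candidate.path or "/").startswith(directory)
    return True


print(in_scope("https://shop.example.com/", "https://www.example.com/",
               crawl_subdomains=True))  # True
print(in_scope("https://example.com/shop/", "https://example.com/blog/",
               exact_directory=True))  # False
```

Matching on `"." + base` rather than a bare suffix keeps look-alike hosts such as `notexample.com` out of scope.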

User-Agent

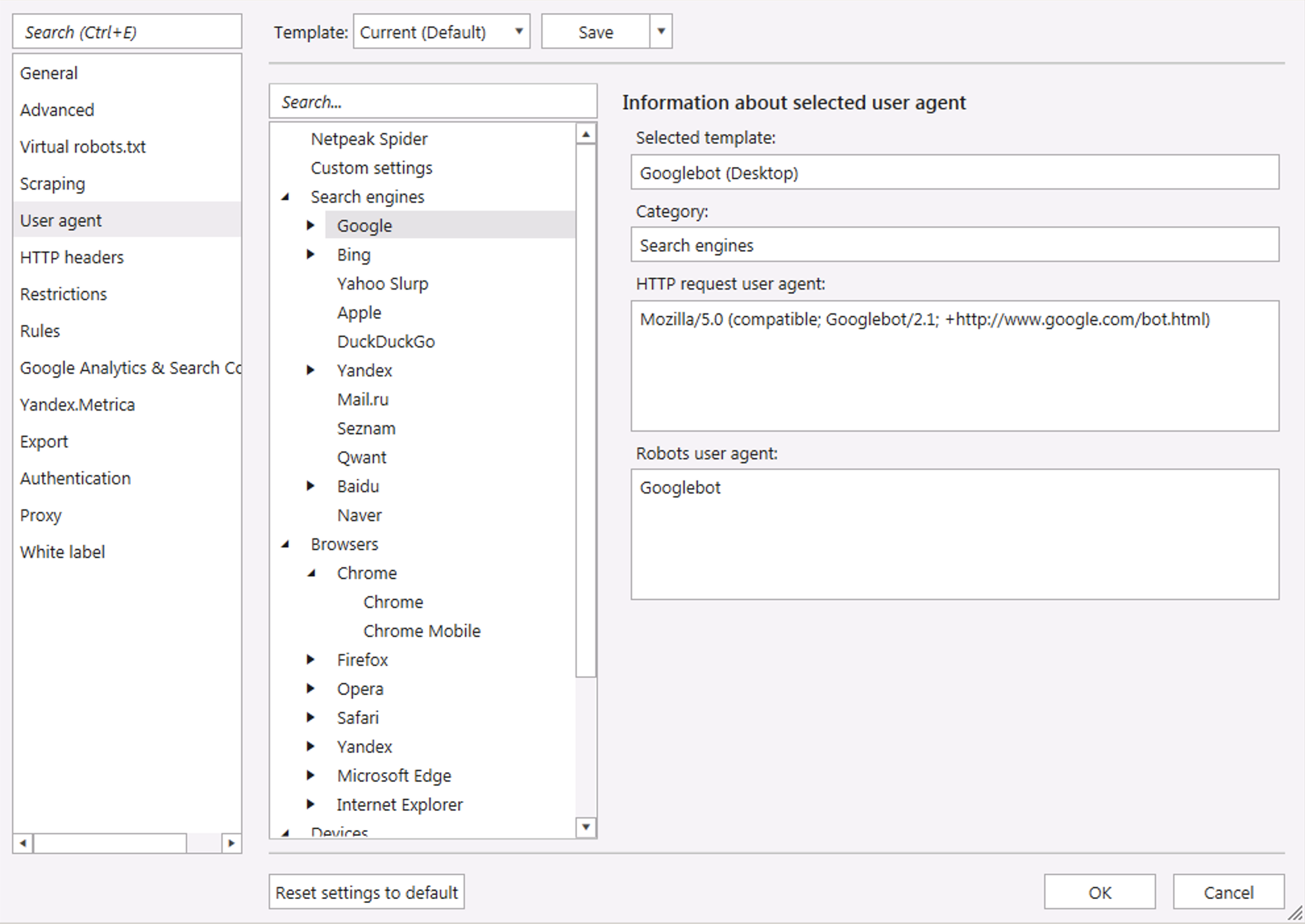

Select the user-agent you want to use. The User-Agent is an HTTP request header that identifies the client making the request, such as a browser or a web crawler. Don’t want competitors to notice you crawling their site? Simply change your user-agent to Googlebot.

In Netpeak Spider, you can use more than 70 user-agents of popular browsers, search engines, and devices. By the way, you can also add a custom one.
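Under the hood, switching the user-agent just means sending a different `User-Agent` request header. Here’s a minimal sketch with Python’s standard `urllib`; `build_request` is a hypothetical helper and `example.com` is a placeholder, so treat it as an illustration of the header, not of how Netpeak Spider sends requests.

```python
import urllib.request

# Googlebot's desktop user-agent string as published by Google; any UA works here.
GOOGLEBOT_UA = ("Mozilla/5.0 (compatible; Googlebot/2.1; "
                "+http://www.google.com/bot.html)")


def build_request(url, user_agent):
    """Prepare a GET request that identifies itself with the given User-Agent."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


req = build_request("https://example.com/", GOOGLEBOT_UA)
# urllib stores header names capitalize()-d, hence "User-agent".
print(req.get_header("User-agent"))
# urllib.request.urlopen(req) would send it; omitted so the sketch runs offline.
```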

User-Agent Settings

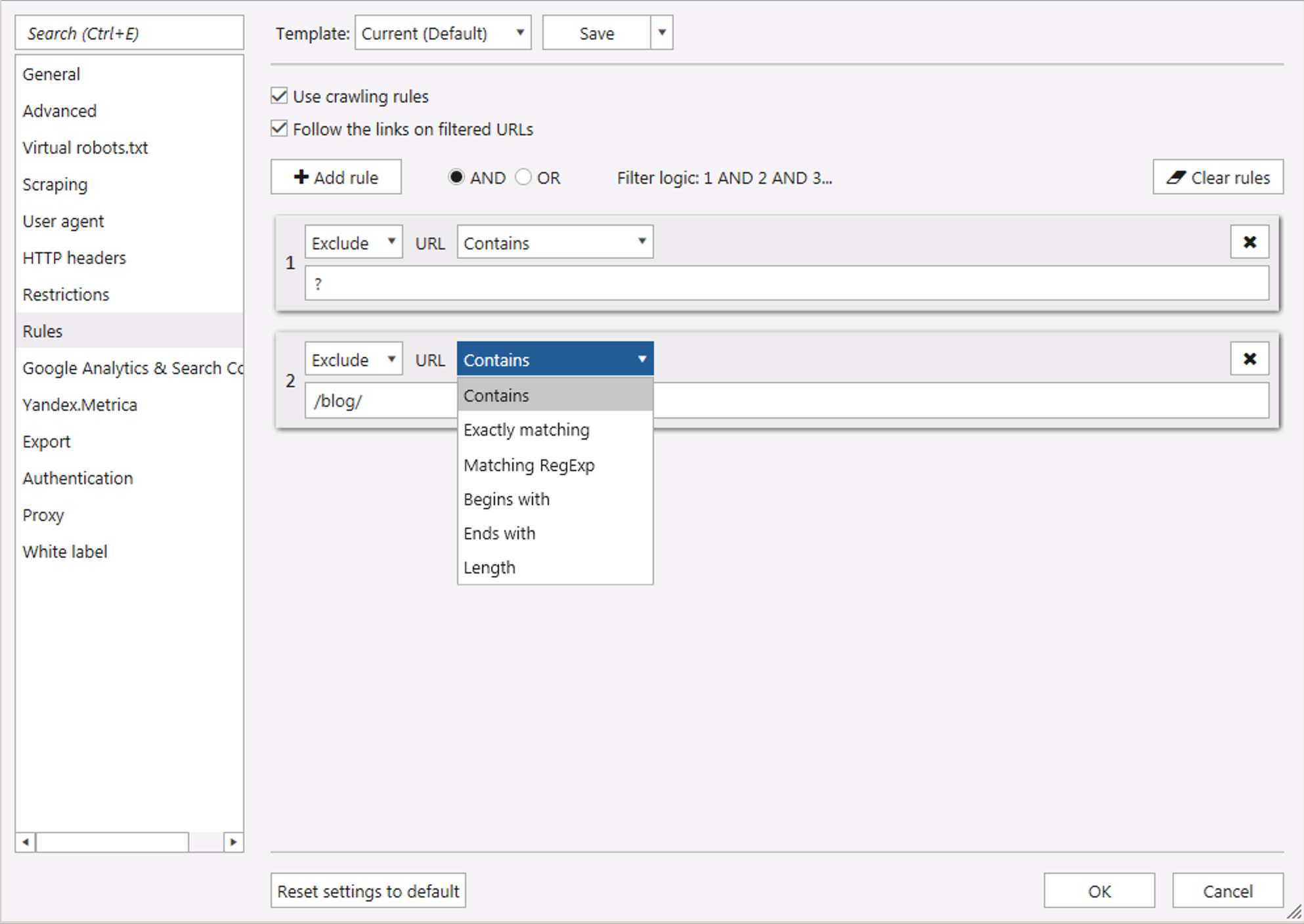

Custom Crawling Rules 🧐

Don’t want to crawl pages with URL parameters, or want to crawl only pages from a certain category? Use crawling rules to make Netpeak Spider crawl only URLs that match the patterns you set.
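Conceptually, crawling rules are include/exclude patterns checked against each URL before it gets queued. Here’s a minimal Python sketch using regular expressions. The function name and the “must match at least one include pattern and no exclude pattern” semantics are my assumptions, not Netpeak Spider’s documented behavior, and the URLs are made up.

```python
import re


def matches_rules(url, include=(), exclude=()):
    """Return True if `url` should be crawled under include/exclude regex rules.

    Assumed semantics (a sketch, not Netpeak Spider's exact logic):
    - if include patterns are given, the URL must match at least one;
    - the URL must match none of the exclude patterns.
    """
    if include and not any(re.search(p, url) for p in include):
        return False
    return not any(re.search(p, url) for p in exclude)


urls = [
    "https://example.com/shoes/sneakers",
    "https://example.com/shoes/sneakers?color=red",
    "https://example.com/blog/news",
]
# Crawl only the /shoes/ category and skip anything with a query string.
allowed = [u for u in urls if matches_rules(u, include=[r"/shoes/"], exclude=[r"\?"])]
print(allowed)  # ['https://example.com/shoes/sneakers']
```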

ILS a.k.a. I Love Scraping

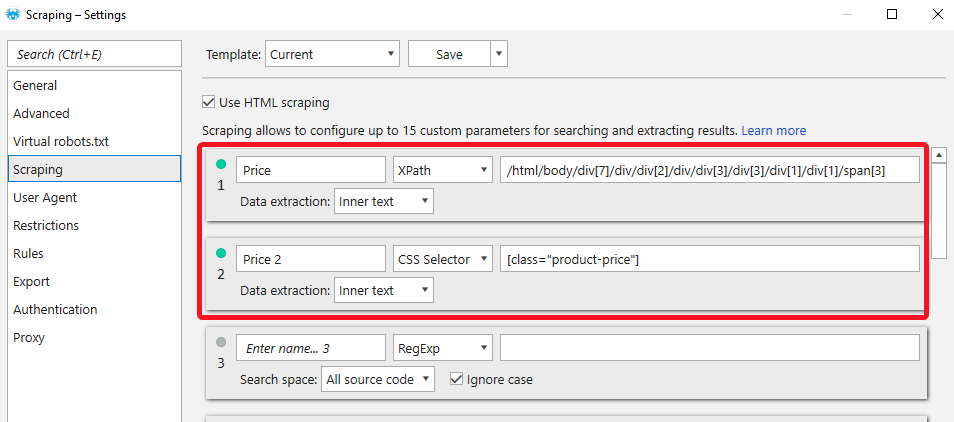

Want to extract data such as prices, phone numbers, or pieces of text or code from a website? Then you should use the scraping feature. The tool supports four types of web scraping: XPath, CSS selectors, RegEx, and content search. If you have some basic knowledge of CSS selector and XPath syntax, you can extract almost anything from anywhere.

Let me show you how you can easily extract prices from a competitor’s website:

- Go to the product page and find the price tag.

- Right-click on it and click ‘Inspect’. This will show you how the price looks like in the source code.

- Now you have several options. The first one is the simplest: right-click on the price element → ‘Copy’ → ‘Copy XPath’. In most cases, this will work with no hassle.

To do it wisely, you should find a unique identifier of the price and use it as a scraping condition. In our case, the price is located in the class called “product-price”. After wrapping it using CSS selectors syntax, the scraping condition should be → [class=”product-price”].

4. Let’s try to scrape using both methods. Go to the ‘Settings’ tab → ‘Scraping’. Enable HTML scraping and enter the scraping conditions you got in the previous step.

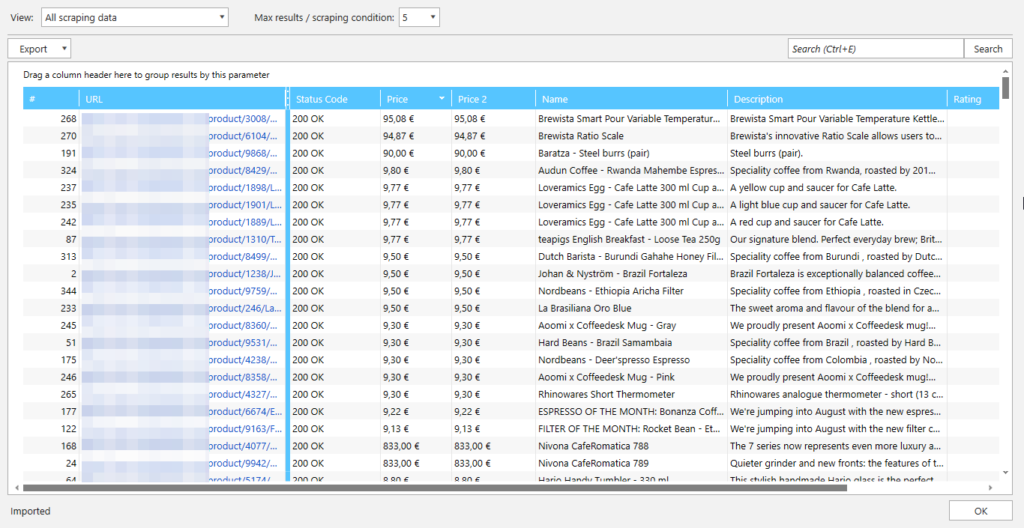

5. Enter website URL and start crawling. You can disable all the unneeded parameters to speed up crawling (make sure scraping is enabled). If you want to scrape product prices from a particular category, use crawling rules.

6. Here you go. When crawling is complete, go to the ‘Scraping’ tab in a sidebar and click ‘Show all results’.

As you can see, both methods worked like a charm. I also scraped the product name, description, and rating.

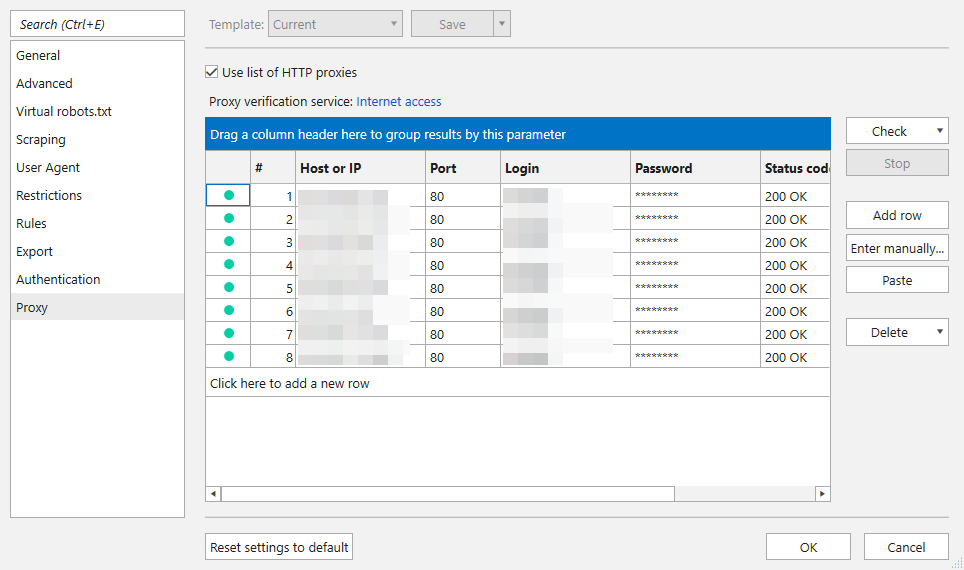
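If you’re curious what the CSS condition [class="product-price"] does to the page’s HTML, here’s a minimal Python sketch. Python’s standard library has no CSS selector engine, so this hand-rolls that single exact-match condition with `html.parser`. The class name `ClassTextScraper` and the sample markup are hypothetical, and the sketch assumes every child of a matched element (other than void tags like `<br>`) has a closing tag.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not change the depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ClassTextScraper(HTMLParser):
    """Collect the text of elements whose class attribute equals target_class.

    Mirrors the exact-match CSS condition [class="product-price"].
    """

    def __init__(self, target_class):
        super().__init__()
        self.target_class = target_class
        self.depth = 0      # > 0 while inside a matched element
        self.buffer = []    # text pieces of the current match
        self.results = []   # one string per matched element

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1  # nested element inside a match
        elif dict(attrs).get("class") == self.target_class:
            self.depth = 1
            self.buffer = []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self.depth:
            return
        self.depth -= 1
        if self.depth == 0:
            self.results.append("".join(self.buffer).strip())

    def handle_data(self, data):
        if self.depth:
            self.buffer.append(data)


# Hypothetical product-page markup.
html = """
<div class="product">
  <h1 class="product-name">Trail Runner</h1>
  <span class="product-price">$89.99</span>
</div>
"""
scraper = ClassTextScraper("product-price")
scraper.feed(html)
print(scraper.results)  # ['$89.99']
```

The exact-match condition is strict on purpose: an element with class="product-price sale" would not match, which is the same behavior as the attribute selector in the tutorial.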

Proxies

Got your IP blocked by the website you want to crawl, or want to go undetected? Then you need proxies. Netpeak Spider has a nice proxy manager that lets you add a list of proxies and verify their connection and status.

It’s handy to see which proxies are dead or blocked when you use dozens of them.

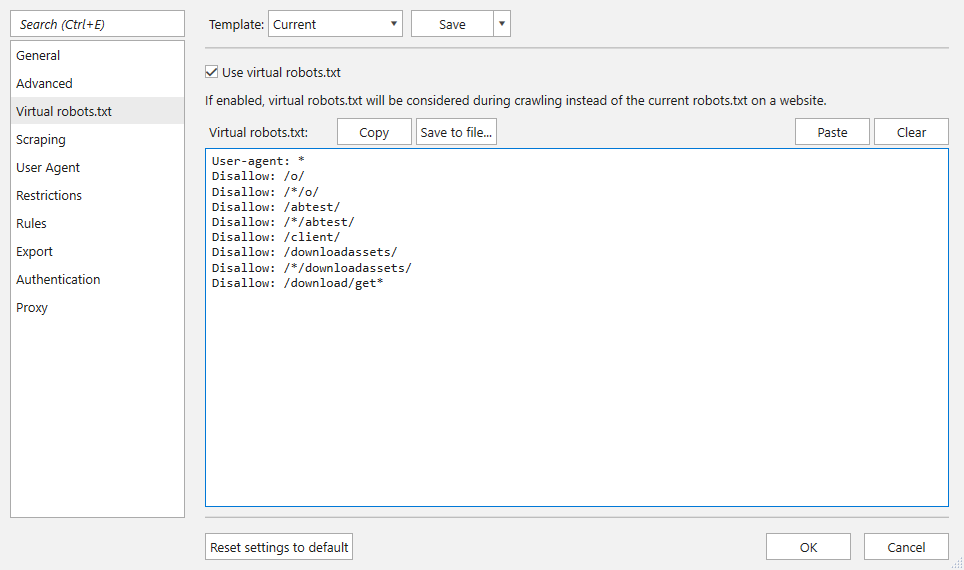

Virtual Robots.txt 🤖

Another cool feature you may not use regularly but will appreciate once you need it is virtual robots.txt. It lets you crawl a website using a custom robots.txt file instead of the one on the site. It’s extremely useful when you need to tweak crawling instructions and want to test them first.

That’s it. Once you’ve finished with the settings, paste the website URL into the address bar and hit the ‘Start’ button.

By the way, if you already have a list of URLs you want to crawl, you can paste them straight into the main table or upload them from a file.
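If you want to sanity-check draft robots.txt rules outside the tool, Python’s built-in `urllib.robotparser` can parse rules you supply as text instead of fetching the live file. This is a stand-in for the idea, not how Netpeak Spider implements it, and the rules and URLs are hypothetical. One caveat: Python’s parser applies rules in file order (first match wins), while Google uses the most specific matching rule, so results can differ when Allow and Disallow rules overlap.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical "virtual" robots.txt you want to try before deploying it.
VIRTUAL_ROBOTS = """\
User-agent: *
Disallow: /cart/
Disallow: /search
Allow: /
"""

parser = RobotFileParser()
parser.parse(VIRTUAL_ROBOTS.splitlines())  # parse text instead of fetching a URL

for url in ["https://example.com/",
            "https://example.com/cart/checkout",
            "https://example.com/search?q=shoes",
            "https://example.com/blog/post"]:
    verdict = "allowed" if parser.can_fetch("*", url) else "blocked"
    print(url, "->", verdict)
```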

Analyzing Crawling Results in Netpeak Spider

When the tool has finished crawling, you are left face-to-face with tons of data. Fortunately, Netpeak Spider not only provides raw data but also helps you get real insights into on-page SEO.

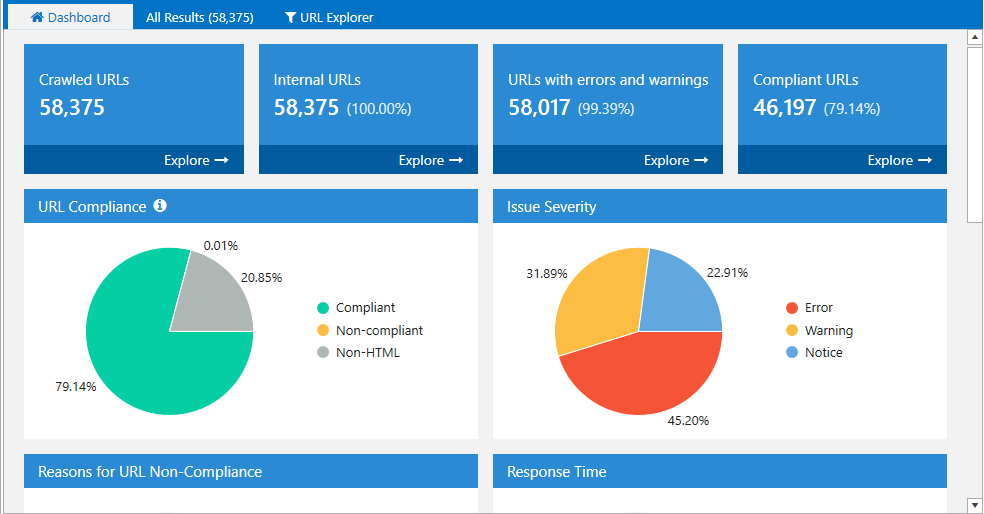

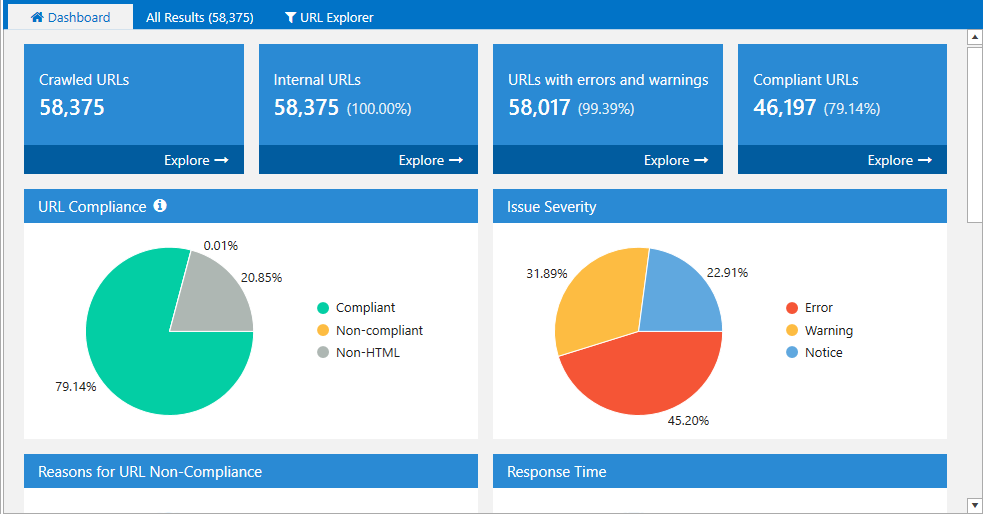

Dashboard

The first thing you’ll probably pay attention to is the dashboard. At the very top, it shows:

- Number of crawled URLs

- Number of internal URLs

- Number of URLs with errors and warnings

- Number of compliant URLs

Below that, eight blocks visualize the key data: the percentage of compliant URLs, issue severity, status codes, number of URLs by click depth, etc.

What’s great is that the dashboard is interactive. Just click on any parameter, and you will see pages filtered accordingly.

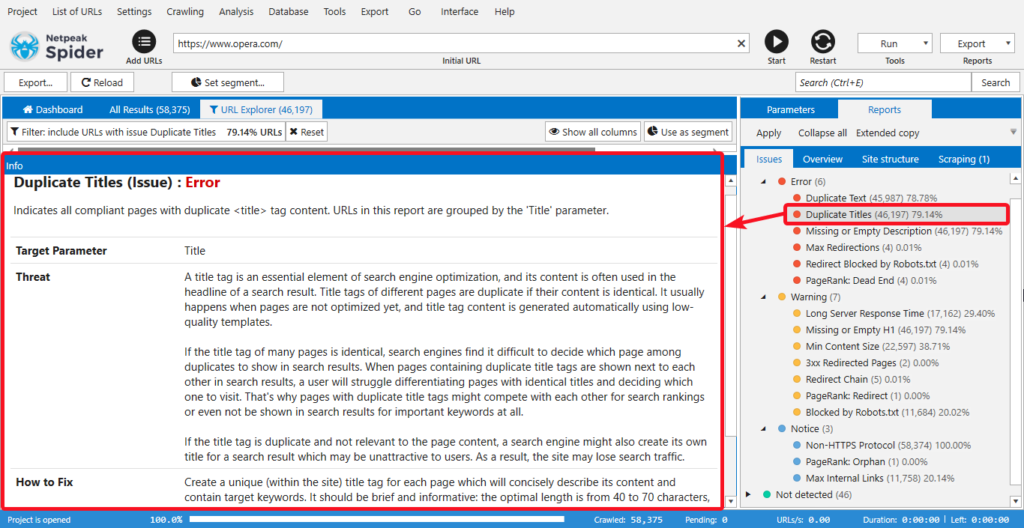
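Click depth is usually defined as the fewest clicks needed to reach a page from the start page, which is exactly what a breadth-first search over internal links gives you. Here’s a minimal Python sketch under that assumption; the link map is hypothetical, and Netpeak Spider’s own calculation may differ in details (for example, how it treats redirects or nofollow links).

```python
from collections import Counter, deque


def click_depths(links, start):
    """Breadth-first search: depth = fewest clicks needed to reach a page from start."""
    depth = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depth:  # first visit in BFS = shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


# Hypothetical internal link map: page -> pages it links to.
site = {
    "/": ["/shoes/", "/blog/"],
    "/shoes/": ["/shoes/sneakers", "/"],
    "/blog/": ["/blog/post-1"],
    "/blog/post-1": ["/shoes/sneakers"],
}

depths = click_depths(site, "/")
print(depths)
# {'/': 0, '/shoes/': 1, '/blog/': 1, '/shoes/sneakers': 2, '/blog/post-1': 2}

# Number of URLs at each click depth, similar to the dashboard chart.
print(sorted(Counter(depths.values()).items()))  # [(0, 1), (1, 2), (2, 2)]
```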

Issue Categorization

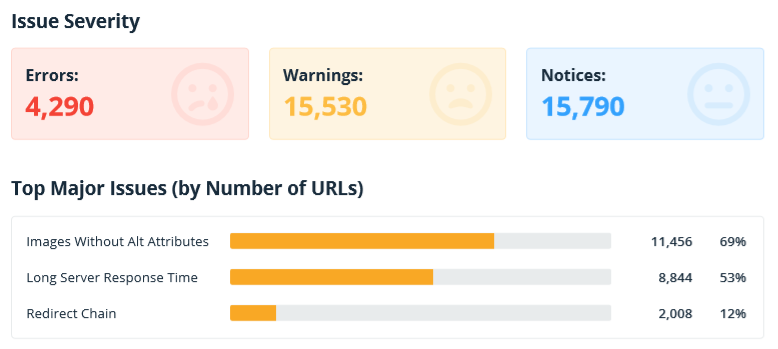

Netpeak Spider divides all issues into three groups according to their severity:

- Errors

- Warnings

- Notices

This is especially useful for non-SEO pros, since it gives you an idea of which issues to address first.

Moreover, each issue in the list has a detailed description. Click on any issue in the sidebar and check the ‘Info’ panel at the bottom. There you will find what threat the issue poses and how to fix it.

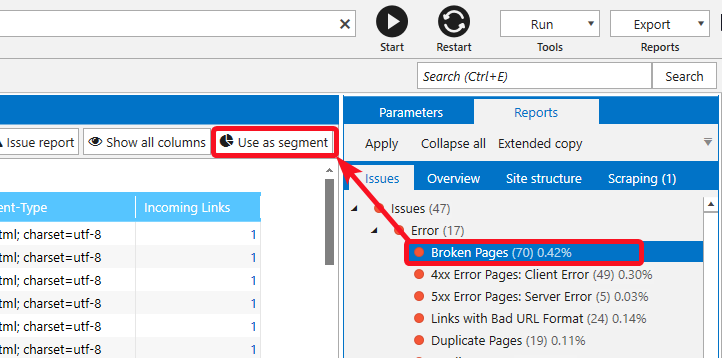

Results Filtering, Grouping, and Segmenting

Working with significant amounts of data is a pain in the ass. It’s easy to overlook vital insights in a messy results table. The results table in Netpeak Spider is exceptionally flexible: it lets you sort data, group results by certain parameters, and create data segments… a huge feature.

How to filter results:

- Click on any issue in a sidebar to see only pages containing it

- Click on any parameter from the ‘Overview’ tab to see pages matching it

- Click on any website part in the ‘Site structure’ report to see pages from the corresponding part

- Click on any value on the dashboard

- Right-click on any value in the results table and select ‘Filter by value’

- Set several custom filtering rules

All the filtered results are shown in a separate tab, so the main one remains unchanged.

Different Ways to Filter Results in Netpeak Spider

To group the results by a specific parameter, just drag and drop it slightly above the table. You can use multiple grouping parameters.

The icing on the cake is the segmenting feature. You can set any filtered data as a segment, and the whole tool will adjust to show only that segment. This feature is a gift from above for those who work on big projects, because it lets you focus all your attention on a specific part of the website.

Database

To avoid overloading the main table, specific results such as incoming and outgoing links, images, redirects, and h2-h6 headings are stored in a database. You can check them on the ‘Database’ tab.

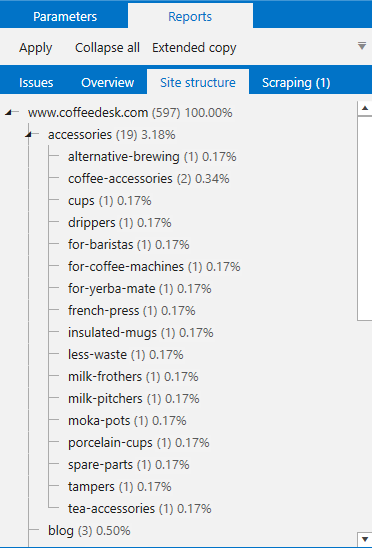

Website Structure

On the ‘Reports’ tab in the sidebar, you can have a look at the full website structure. Keep in mind that it’s built from the URL structure, so if your URLs don’t follow a logical hierarchy, the report won’t be very useful.

You can export the full structure and visualize it using tools like XMind.
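To see why the report depends so much on your URLs, here’s a minimal Python sketch that builds a tree purely from URL path segments. It assumes a structure report works roughly this way, which is my guess rather than Netpeak Spider’s documented algorithm, and the URLs are hypothetical. Notice how a query-string URL like /product.php?id=42 collapses into a single flat node with no hierarchy at all.

```python
from urllib.parse import urlparse


def build_tree(urls):
    """Nest URL path segments into a dict-of-dicts tree, one level per segment."""
    tree = {}
    for url in urls:
        node = tree
        for segment in urlparse(url).path.strip("/").split("/"):
            if segment:  # the homepage "/" yields no segments
                node = node.setdefault(segment, {})
    return tree


def print_tree(node, indent=0):
    """Print the tree with two spaces of indentation per level."""
    for name in sorted(node):
        print("  " * indent + name)
        print_tree(node[name], indent + 1)


urls = [
    "https://example.com/",
    "https://example.com/blog/post-1",
    "https://example.com/blog/post-2",
    "https://example.com/shoes/sneakers/red",
    "https://example.com/product.php?id=42",
]
tree = build_tree(urls)
print_tree(tree)
```

A nested dict like this is also easy to dump as an indented outline for import into a mind-mapping tool.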

Reports in Netpeak Spider 📊

There are more than 70 different reports you can export in Netpeak Spider. You can get current table results, a separate report on each issue, and a bunch of special reports.

All the reports can be exported in .csv or .xlsx format, except the Express Audit of the Optimization Quality, which is, obviously, a .pdf 😉

The PDF audit is an eye-catching report containing 12 sections with all the key on-page parameters presented in graphs and charts… G for graphs and C for charts.

Here is a video overview of the PDF report, if you’d like to see it.

If you’re offering SEO services, though, you might want to prepare a branded PDF report with your logo and contact details for your clients. The tool’s white label feature is a great way to add that personalization. Even if you don’t have a logo, the feature will remove all Netpeak Software watermarks and keep your report clean.

Built-in Tools in Netpeak Spider

Besides its main functionality, which is crawling, Netpeak Spider has a bunch of interesting additional features. Let’s have a quick look at them.

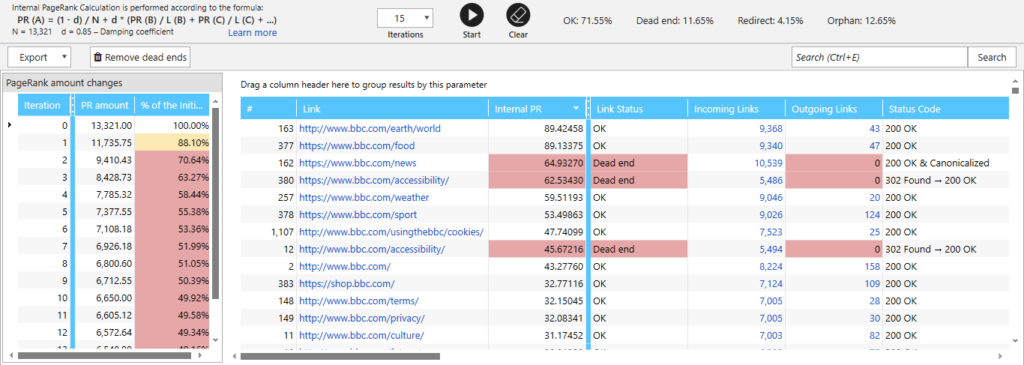

Internal PageRank Calculation

This has nothing to do with the PR score we used to rely on back in the day, although it’s based on the original PageRank formula. The algorithm analyzes internal linking and calculates the relative weight of each page, which I like. Using this tool, you can check how internal link weight is distributed across your website.

Though it doesn’t consider external links that also power up your site, this tool is perfect for detecting dead-end pages and essential pages that don’t get enough weight through internal links.

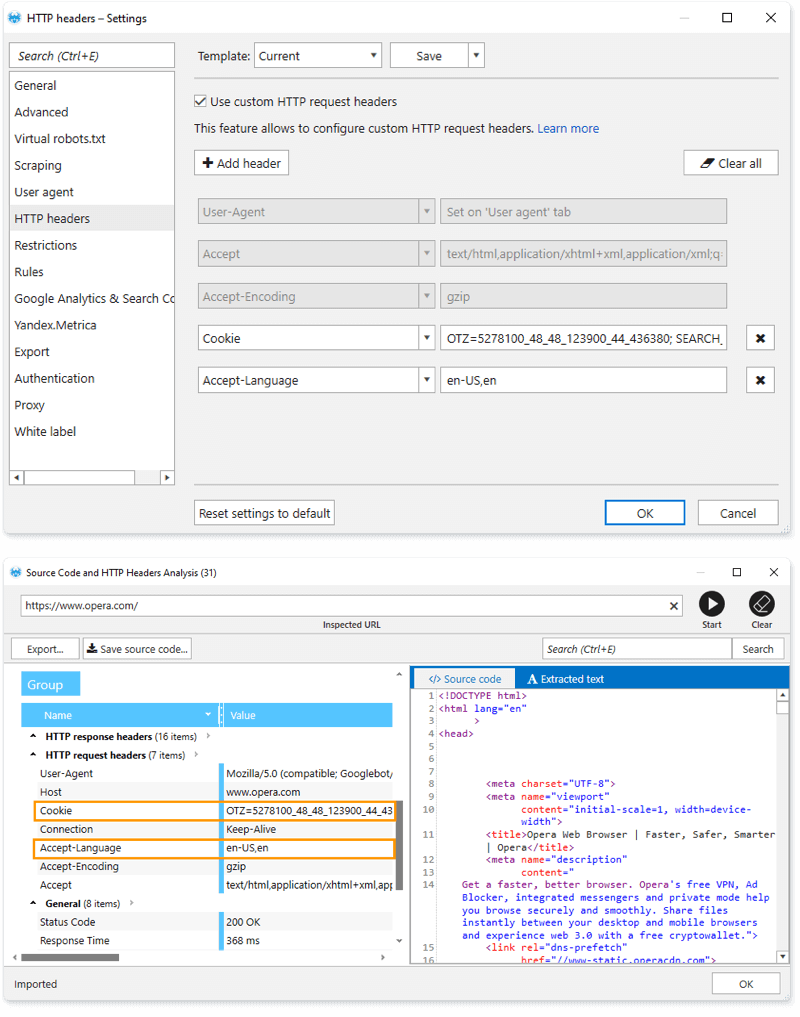
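The idea behind the calculation can be sketched with the textbook PageRank power iteration over an internal link graph. This is a generic Python sketch, not Netpeak Spider’s implementation: the 0.85 damping factor, the fixed 50 iterations, and spreading the rank of dead-end pages evenly across all pages are standard textbook choices I’m assuming here (Netpeak Spider may handle dead ends differently), and the `site` link map is hypothetical.

```python
def internal_pagerank(links, damping=0.85, iterations=50):
    """Textbook PageRank over an internal link graph {page: [linked pages]}."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Rank held by dead-end pages (no outgoing links) is spread evenly.
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new_rank = {p: (1 - damping) / n + damping * dangling / n for p in pages}
        for page, targets in links.items():
            if targets:
                # Each outgoing link passes an equal share of the page's rank.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical internal link map: page -> pages it links to.
site = {
    "/": ["/shoes/", "/blog/"],
    "/shoes/": ["/", "/shoes/sneakers"],
    "/blog/": ["/"],
    "/shoes/sneakers": [],
}

ranks = internal_pagerank(site)
for page, score in sorted(ranks.items(), key=lambda kv: -kv[1]):
    print(f"{page:18} {score:.3f}")

# Dead-end pages: they receive link weight but pass none on.
dead_ends = sorted(p for p in ranks if not site.get(p))
print("Dead ends:", dead_ends)
```

Because dead-end rank is redistributed, the scores always sum to 1, which makes them easy to read as each page’s relative share of internal link weight.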

Source Code and HTTP Headers Analysis

Using this tool, you can check the HTTP request headers the crawler sends to the server and the HTTP response it receives. There’s also a handy source code inspector where you can check the exact code returned to the crawler.

Customizing HTTP headers can also help you crawl protected websites, visit the site logged in as a specific user, and test it in various situations.
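For a feel of what header analysis involves, here’s a minimal Python sketch that parses a raw response header block and pulls out the X-Robots-Tag directives, one of the indexing instructions a crawler has to respect. The header values are hypothetical, the status line is assumed to be already consumed, and this only illustrates the concept rather than Netpeak Spider’s inspector.

```python
import http.client
import io

# Hypothetical raw response headers (status line already consumed).
RAW_RESPONSE_HEADERS = (
    b"Content-Type: text/html; charset=utf-8\r\n"
    b"Cache-Control: max-age=3600\r\n"
    b"X-Robots-Tag: noindex, nofollow\r\n"
    b"\r\n"
)

# parse_headers reads header lines until the blank line and returns an
# http.client.HTTPMessage, which supports case-insensitive lookups.
headers = http.client.parse_headers(io.BytesIO(RAW_RESPONSE_HEADERS))

print(headers["content-type"])  # text/html; charset=utf-8

# Split the X-Robots-Tag value into individual directives.
directives = [d.strip().lower() for d in headers.get("X-Robots-Tag", "").split(",")]
print("noindex" in directives)  # True
```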

Sitemap Generator and Validator

You can use Netpeak Spider to create an XML sitemap, an image XML sitemap, an HTML sitemap, and a TXT sitemap. You can also configure the last modified date, change frequency, and priority parameters, and compress the sitemap into a .gz archive. If the sitemap exceeds the protocol limits (50 MB uncompressed or 50,000 URLs), a sitemap index file is created.

If you already have a sitemap, you can use the crawler to validate it against the standard Sitemap protocol.
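The 50,000-URL rule is easy to picture in code. Here’s a minimal Python sketch that writes `<urlset>` sitemaps with `xml.etree.ElementTree`, splits them at 50,000 URLs, and adds a sitemap index when more than one file is needed. It’s a simplified illustration, not Netpeak Spider’s generator: the file names and `build_sitemaps` helper are hypothetical, only `loc` and `lastmod` are written, and the 50 MB size limit is not checked.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
XML_DECLARATION = '<?xml version="1.0" encoding="UTF-8"?>\n'
MAX_URLS_PER_FILE = 50_000  # per-file URL limit from the sitemaps.org protocol


def build_urlset(entries):
    """Serialize (loc, lastmod) pairs into one <urlset> sitemap document."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:  # optional W3C date, e.g. "2024-01-15"
            ET.SubElement(url, "lastmod").text = lastmod
    return XML_DECLARATION + ET.tostring(urlset, encoding="unicode")


def build_sitemaps(entries, base_url):
    """Return {filename: xml}; split into chunks plus an index when needed."""
    chunks = [entries[i:i + MAX_URLS_PER_FILE]
              for i in range(0, len(entries), MAX_URLS_PER_FILE)] or [[]]
    if len(chunks) == 1:
        return {"sitemap.xml": build_urlset(chunks[0])}

    files = {f"sitemap-{n}.xml": build_urlset(chunk)
             for n, chunk in enumerate(chunks, start=1)}
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for name in files:
        entry = ET.SubElement(index, "sitemap")
        ET.SubElement(entry, "loc").text = base_url + name
    files["sitemap-index.xml"] = XML_DECLARATION + ET.tostring(index, encoding="unicode")
    return files


# Hypothetical site with 120,000 product pages.
pages = [(f"https://example.com/p/{i}", "2024-01-15") for i in range(120_000)]
files = build_sitemaps(pages, "https://example.com/")
print(sorted(files))
# ['sitemap-1.xml', 'sitemap-2.xml', 'sitemap-3.xml', 'sitemap-index.xml']
```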

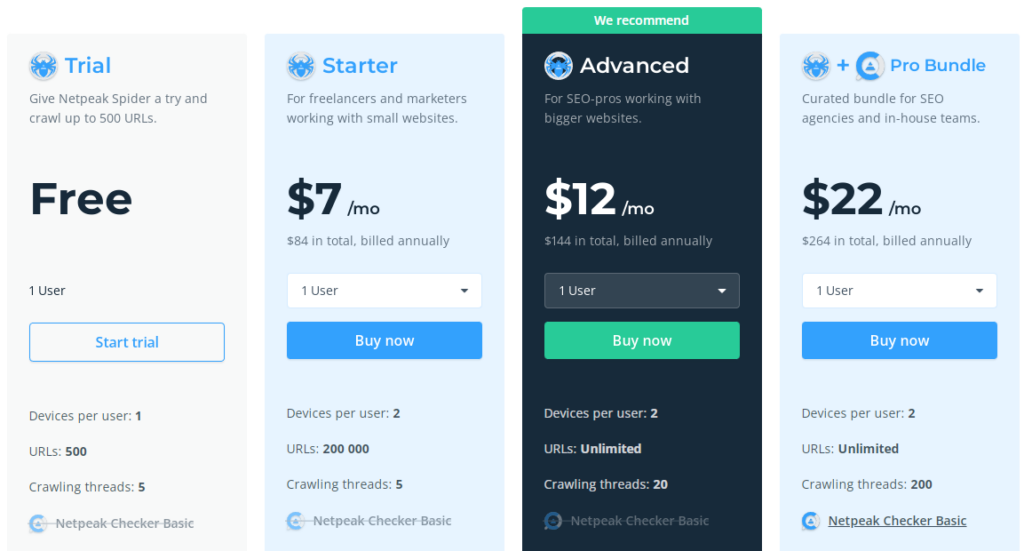
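Validation works the other way around. Here’s a minimal Python sketch that checks a few protocol basics: well-formed XML, a `<urlset>` root in the sitemaps.org namespace, at most 50,000 URLs, and an absolute http(s) `<loc>` in every entry. It covers only a small subset of what a full validator checks (no size limit, `lastmod` format, or schema validation), and `validate_sitemap` and the sample are hypothetical.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"  # ElementTree's {uri}tag form
MAX_URLS = 50_000


def validate_sitemap(xml_text):
    """Return a list of human-readable problems (empty list = no problems found)."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as exc:
        return [f"not well-formed XML: {exc}"]
    if root.tag != NS + "urlset":
        return [f"unexpected root element: {root.tag}"]

    problems = []
    entries = root.findall(NS + "url")
    if len(entries) > MAX_URLS:
        problems.append(f"{len(entries)} URLs exceeds the {MAX_URLS:,} limit")
    for i, entry in enumerate(entries, start=1):
        loc = (entry.findtext(NS + "loc") or "").strip()
        if not loc:
            problems.append(f"<url> #{i} has no <loc>")
            continue
        parts = urlparse(loc)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            problems.append(f"<url> #{i}: {loc!r} is not an absolute http(s) URL")
    return problems


sample = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>/relative/page</loc></url>
  <url><lastmod>2024-01-15</lastmod></url>
</urlset>"""
for problem in validate_sitemap(sample):
    print(problem)
```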

Pricing

Netpeak Spider comes with a 14-day free trial, so you can try all the features with no limits at all.

What’s more, if you use the promo code ‘milosz10’, you’ll get an extra 10% off!

Each tier has a different set of features, built-in tools, reports, and integrations. You can compare them on the pricing page.

Netpeak Spider Alternatives

Jet Octopus

Jet Octopus is a one-of-a-kind tool that offers not only great crawling capabilities but also a fairly unique pricing plan: you can adjust it to your needs. Their rate is 100K crawls for 10 EUR. Not bad, huh?

DeepCrawl

DeepCrawl is a crawling tool whose main focus is enterprise-level businesses, eCommerce sites, publishers, and agencies. It offers extensive website crawling capabilities, and clients such as Nestle and Shopify use its services.

Screaming Frog

Screaming Frog is another fairly recognizable tool in the web crawling market. They provide lots of useful features that will surely meet your requirements. They have a free version for you to try out. Their pricing starts at 149 EUR annually, which is not too shabby, as you get unlimited URLs.

Moz

Moz is a large SEO platform with a lot of tools, one of which is Site Crawl. Again, it supplies enough data to keep you satisfied. You can try the tool for free for 30 days. Pricing starts at $99 a month with up to 400,000 pages crawled monthly, and it comes with other useful SEO tools, too.

TL;DR

Netpeak Spider is an excellent SEO tool that deserves a place in everyone’s toolbox: it’s extensive, robust, and doesn’t cost a fortune. It’s widely used by professionals all over the world and has tons of positive reviews.

Don’t waste time: try all the features with your free 14-day trial.